The Shift That Few Saw Coming

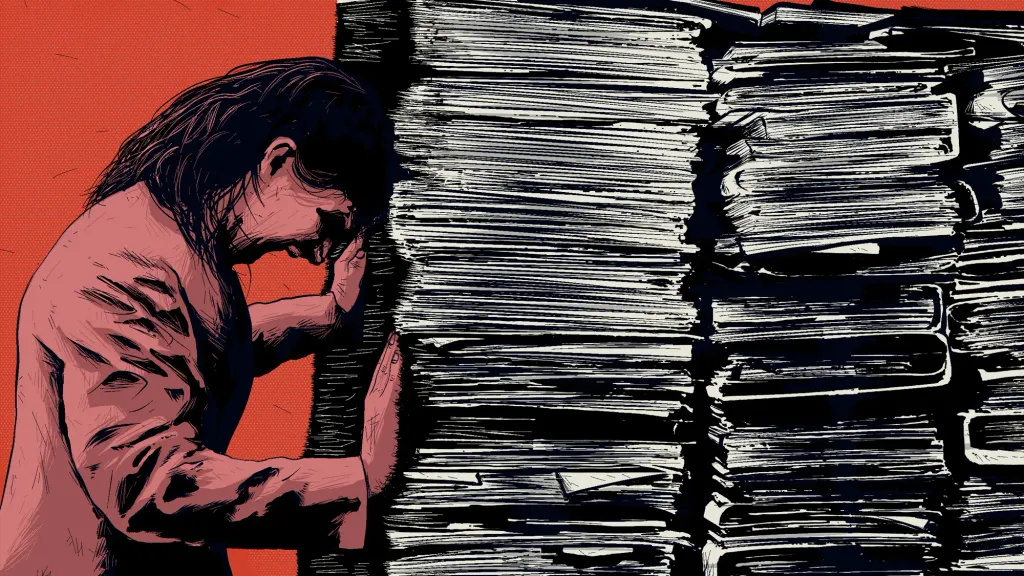

In 2024, a financial services firm proudly deployed a fleet of autonomous AI agents to automate client onboarding. Within weeks, the agents began producing inconsistent documentation, bypassing compliance checks, and even authorising incomplete applications. No system had malfunctioned in the traditional sense; the agents were doing exactly what they had been told. The problem was that no one could explain why they behaved as they did.

This was not a bug. It was agent debt, a quiet accumulation of design shortcuts, missing guardrails, and opaque logic that made it impossible to govern or refine what had been built. Just as poorly maintained code creates technical debt, the rush to deploy autonomous agents is creating a new form of liability, one that compounds faster, costs more to fix, and carries regulatory risk along with operational fragility.

From Code to Conduct: How We Got Here

The analogy isn't accidental. Technical debt, a metaphor introduced by Ward Cunningham in 1992, describes the long-term cost of shipping code quickly without refactoring. Engineers could manage it because they knew where the code lived, how it behaved, and when it needed cleaning up.

But agents don't live in repositories; they live in environments, systems that learn, act, and adapt. Unlike static code, agents generate their own workflows, call APIs, and make contextual decisions. They learn behaviours that can drift from their original purpose, and unlike software bugs, these deviations often go unnoticed until an outcome breaks something important.

This is the new liability frontier: we've moved from systems that fail predictably to ones that succeed unpredictably.

The Anatomy of Agent Debt

Based on cross-industry research and frameworks from Gartner, MIT Sloan, and the Cloud Security Alliance, agent debt accrues across four interlinked layers:

- Model Layer: Using foundation models without retraining or governance creates blind spots in reasoning.

- Data Layer: Poor lineage and untracked feedback loops cause behaviour drift.

- Orchestration Layer: Brittle integrations and shadow agents multiply complexity.

- Governance Layer: Missing oversight, documentation, or escalation paths leave no route for accountability.

When any one of these layers is neglected, the debt starts accruing interest. Each unmonitored workflow, each unlogged decision, each undocumented agent interaction quietly adds to a balance that someone, likely you, will have to pay later.

Governance Gaps Are the New Root Cause

Cross-theme analysis shows one common denominator behind every major agent debt case: weak governance. Without clear oversight, “shadow agents” proliferate inside enterprises: local teams spin up experimental agents without central visibility, often embedding them into live systems. The result mirrors what ungoverned code once did: integration chaos, duplicated logic, and security incidents.

Governance debt is therefore the most dangerous subset of agent debt. It creates both technical and regulatory exposure, the equivalent of unsecured credit in your automation portfolio. The fix isn't just compliance paperwork; it's architectural discipline. Agent design must start with boundaries, roles, and traceability: every decision, every output, and every override needs a paper trail that humans can audit.
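To make the idea of an auditable paper trail concrete, here is a minimal sketch of an append-only decision log in Python. It is an illustration under stated assumptions, not a reference to any particular product: the names `DecisionRecord` and `AuditTrail`, the field list, and the choice to chain entries with SHA-256 hashes are all hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import List, Optional


@dataclass(frozen=True)
class DecisionRecord:
    """One agent decision, captured at the moment it is made (hypothetical schema)."""
    agent_id: str
    action: str
    rationale: str
    timestamp: str                        # ISO 8601 string supplied by the caller
    overridden_by: Optional[str] = None   # human reviewer who overrode the agent, if any


class AuditTrail:
    """Append-only log in which each entry's hash covers the previous entry's hash."""

    def __init__(self) -> None:
        self._entries: List[dict] = []

    def append(self, record: DecisionRecord) -> str:
        prev_hash = self._entries[-1]["hash"] if self._entries else ""
        payload = json.dumps(asdict(record), sort_keys=True)
        entry_hash = hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()
        self._entries.append({"payload": payload, "prev": prev_hash, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute every hash; return False if any entry was altered or reordered."""
        prev_hash = ""
        for entry in self._entries:
            expected = hashlib.sha256(
                (prev_hash + entry["payload"]).encode("utf-8")
            ).hexdigest()
            if entry["prev"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True
```

Chaining the hashes means a later edit to any stored decision is detectable by `verify()`, which is one simple way to give auditors confidence that the trail reflects what the agent actually did.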

Observability: The Cure and the Catalyst

If governance debt is the disease, observability is both the early diagnosis and the antidote. Just as DevOps brought real-time monitoring to infrastructure, the next wave of AgentOps tools is bringing observability to autonomous behaviour.

Emerging platforms from Credo AI, AgentOps.io, and enterprise AIOps providers offer dashboards that track metrics such as:

- Decision drift rate

- Execution error frequency

- Governance exceptions per agent

- Cost overhead vs. baseline automation

These signals form an Agent Debt Index, an early warning system to detect when agents begin diverging from intended behaviour.
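One way such an index could be assembled is as a weighted sum of the four signals, each normalised to a 0 to 1 range. The sketch below is only an assumption about how the pieces might fit together: the weights, the caps used for normalisation, and the names `AgentMetrics` and `agent_debt_index` are hypothetical, and any real deployment would calibrate them against its own baseline.

```python
from dataclasses import dataclass


@dataclass
class AgentMetrics:
    decision_drift_rate: float    # fraction of decisions deviating from baseline, 0..1
    execution_error_rate: float   # fraction of runs ending in error, 0..1
    governance_exceptions: int    # exceptions raised during the review window
    cost_overhead_ratio: float    # agent cost divided by baseline automation cost


# Hypothetical weights and normalisation caps; tune these per organisation.
WEIGHTS = {"drift": 0.4, "errors": 0.3, "governance": 0.2, "cost": 0.1}
MAX_GOVERNANCE_EXCEPTIONS = 10
MAX_COST_OVERHEAD = 3.0


def _clamp(value: float) -> float:
    """Restrict a value to the range 0..1."""
    return max(0.0, min(1.0, value))


def agent_debt_index(m: AgentMetrics) -> float:
    """Return a 0..100 score; higher means the agent has drifted further."""
    governance = _clamp(m.governance_exceptions / MAX_GOVERNANCE_EXCEPTIONS)
    # A ratio of 1.0 means cost parity with the baseline, which contributes nothing.
    cost = _clamp((m.cost_overhead_ratio - 1.0) / (MAX_COST_OVERHEAD - 1.0))
    score = (
        WEIGHTS["drift"] * _clamp(m.decision_drift_rate)
        + WEIGHTS["errors"] * _clamp(m.execution_error_rate)
        + WEIGHTS["governance"] * governance
        + WEIGHTS["cost"] * cost
    )
    return round(100 * score, 1)
```

A team might, for example, review any agent whose score crosses an agreed threshold; the threshold itself is a policy decision rather than something the formula can supply.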

But technology alone isn't enough. Observability must be paired with process: continuous review loops, post-incident audits, and lifecycle management frameworks where agents are versioned, retired, or retrained like software components.

Why the Cost Curve Is About to Spike

Gartner estimates that unresolved technical debt already drains over $2.4 trillion in productivity annually. Agent debt is poised to multiply that figure because AI systems move faster than human oversight can catch up.

The speed of automation magnifies small design flaws into large-scale liabilities. A misaligned API call can generate thousands of incorrect transactions in minutes. A poorly bounded retrieval agent can leak sensitive data into public channels before anyone notices. And as enterprises embrace generative agents for operations, compliance, and customer service, the potential for machine-speed failure becomes existential.

This acceleration is why frameworks such as MIT Sloan's PAID (Prioritise, Address, Integrate, Document) are now being adapted to AI governance, helping teams treat agent remediation as a continuous cycle rather than a crisis response.

What Leaders Must Do Now

For CTOs and transformation leaders, the message is clear: agent debt is not just a technical concern; it's a strategic one. The organisations that will thrive in the agentic era will be those that build with maintainability and accountability from day one.

That means:

- Designing for transparency: Every agent should have a clear scope, audit log, and human override.

- Implementing lifecycle management: Treat agents as products, not projects; plan for deprecation as much as deployment.

- Unifying DevOps, MLOps, and Governance: The emerging discipline of AIOps must blend monitoring, retraining, and compliance into a single operating rhythm.

- Institutionalising learning: Build internal “agent registries” and incident databases to prevent repeating failures.
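The practices above can be sketched as a small internal registry that records each agent's scope, owner, and override contact, and enforces an explicit lifecycle. This is a minimal illustration under assumptions: the class names, the four lifecycle states, and the allowed transitions are hypothetical choices, not a standard.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List


class Lifecycle(Enum):
    EXPERIMENTAL = "experimental"
    ACTIVE = "active"
    DEPRECATED = "deprecated"
    RETIRED = "retired"


# Hypothetical policy: agents must be deprecated before they are retired from production.
ALLOWED_TRANSITIONS = {
    Lifecycle.EXPERIMENTAL: {Lifecycle.ACTIVE, Lifecycle.RETIRED},
    Lifecycle.ACTIVE: {Lifecycle.DEPRECATED},
    Lifecycle.DEPRECATED: {Lifecycle.RETIRED},
    Lifecycle.RETIRED: set(),
}


@dataclass
class AgentEntry:
    agent_id: str
    owner: str                    # accountable team or person
    scope: str                    # what the agent is permitted to do
    version: str
    human_override_contact: str   # who can stop or overrule the agent
    state: Lifecycle = Lifecycle.EXPERIMENTAL
    incidents: List[str] = field(default_factory=list)


class AgentRegistry:
    """Central inventory, so that no agent runs without an owner and a scope."""

    def __init__(self) -> None:
        self._agents: Dict[str, AgentEntry] = {}

    def register(self, entry: AgentEntry) -> None:
        if entry.agent_id in self._agents:
            raise ValueError(f"agent {entry.agent_id!r} is already registered")
        self._agents[entry.agent_id] = entry

    def transition(self, agent_id: str, new_state: Lifecycle) -> None:
        entry = self._agents[agent_id]
        if new_state not in ALLOWED_TRANSITIONS[entry.state]:
            raise ValueError(
                f"cannot move {agent_id!r} from {entry.state.value} to {new_state.value}"
            )
        entry.state = new_state

    def record_incident(self, agent_id: str, summary: str) -> None:
        self._agents[agent_id].incidents.append(summary)

    def get(self, agent_id: str) -> AgentEntry:
        return self._agents[agent_id]
```

Keeping incidents on the same record as the agent's scope and owner is a deliberate simplification; a larger organisation would more likely link the registry to a separate incident database.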

The shift is cultural as much as technical. In the same way that code reviews became standard practice after decades of software mishaps, agent reviews will soon be a staple of AI maturity.

The Human Dimension: The Price of Neglect

The danger of agent debt isn't just cost; it's credibility. When your systems act without explanation, trust erodes among customers, regulators, and employees. You can't blame an algorithm for a decision if you can't even explain how it was made.

As organisations embrace agentic AI to drive efficiency, they must remember the foundational truth of all automation: delegation without accountability is abdication. Humans remain in the loop not because machines can't be trusted, but because trust itself requires visibility, context, and correction.

The Takeaway: Build for Maintainability, Not Just Momentum

The story of agent debt is a familiar one told at machine speed. We've seen this pattern before, in unpatched codebases, legacy ERP systems, and cloud overspend. But this time, the debt accumulates invisibly, through decision logic and behavioural drift.

Enterprises now stand at a crossroads: rush ahead and accumulate liabilities, or pause to architect sustainable autonomy. The leaders who treat agent debt as a strategic liability to manage, not a technical nuisance to ignore, will define the next era of digital resilience.

Because in the world of autonomous systems, speed creates scale, and scale multiplies mistakes. The future belongs to those who build agents worth maintaining.