Opening Scene

The Moment the Acronym Broke

When OpenAI released GPT-4, “LLM” became the shorthand for intelligence itself. Every AI demo, every funding pitch, every corporate strategy deck spoke the same three letters. But by 2025, that linguistic convenience had become a liability. Inside labs and boardrooms, a quiet realisation has taken hold: not every AI worth building is a language model.

Something deeper is happening beneath the surface hype: a shift from a single, monolithic idea of intelligence to a diverse ecosystem of specialised models. This is the year AI stopped being one thing and started becoming a system of many.

The Insight

What's Really Happening

For the last five years, the AI race has been defined by scale. Bigger models meant better performance. But that linear logic is breaking down. The cost, compute, and carbon footprint of training trillion-parameter systems have forced a rethink.

A new AI taxonomy is emerging in place of the scale-first approach, with seven model archetypes, each tuned to a specific role:

- LLMs (Large Language Models) still form the conversational and reasoning layer, the storytellers and synthesisers of the AI world.

- LAMs (Large Action Models) move beyond talk into execution, linking natural language to automation tools and APIs.

- SLMs (Small Language Models) miniaturise intelligence for edge and on-device use, balancing privacy and performance.

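- MoEs (Mixture-of-Experts models) distribute intelligence across specialist sub-networks and use a learned router to activate only the few experts needed for each input, so far less of the model runs per query than its total size suggests. The result is a major efficiency gain.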

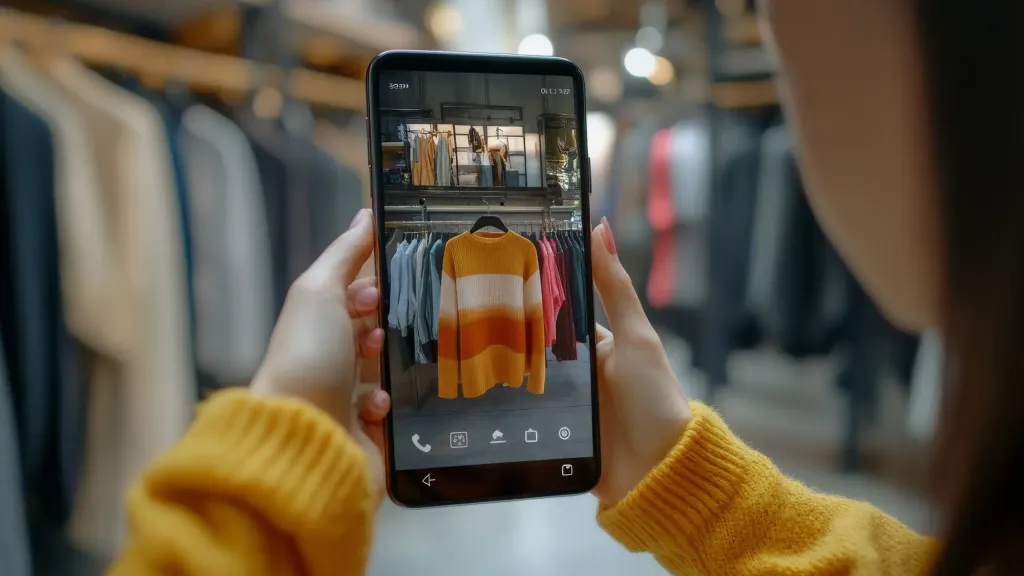

- VLMs (Vision-Language Models) merge sight and speech, letting systems interpret the world visually and linguistically.

- SAMs (Segment Anything Models) redefine computer vision by making promptable, general-purpose segmentation available as a reusable layer, rather than a task to be retrained for every new dataset.

- LCMs (Low-Compute Models, or lightweight controllers) handle orchestration, managing how other models talk to each other.

Together, these architectures form a multi-model economy, where precision, not power, defines advantage.
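To make the Mixture-of-Experts idea from the list above concrete, here is a minimal sketch of top-k routing in plain Python. It is an illustration under stated assumptions, not how any production model is implemented: the four toy "experts" are ordinary functions, the gate scores are hard-coded rather than learned, and the names `top_k_route` and `moe_forward` are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw gate scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_scores, k=2):
    """Select the k highest-scoring experts and renormalise their weights."""
    probs = softmax(gate_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return {i: probs[i] / norm for i in chosen}


def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and blend their outputs by weight."""
    weights = top_k_route(gate_scores, k)
    return sum(w * experts[i](x) for i, w in weights.items())


# Toy experts (assumption: real experts are neural sub-networks).
experts = [
    lambda x: x + 1,
    lambda x: x * 2,
    lambda x: x ** 2,
    lambda x: -x,
]

# Hard-coded gate scores (assumption: a real router learns these per input).
gate_scores = [0.1, 2.0, 2.0, -1.0]

weights = top_k_route(gate_scores, k=2)
output = moe_forward(3.0, experts, gate_scores, k=2)
print(weights, output)
```

Only two of the four experts run for this input, which is where the efficiency comes from: total capacity grows with the number of experts, while the compute per query grows only with `k`.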

The Strategic Shift

Why It Matters for Business

According to McKinsey's State of AI 2025 and Stanford's AI Index, more than half of enterprises now use multiple model types in parallel. A retailer might deploy a VLM to recognise products, a LAM to manage stock updates, and an SLM to handle in-store queries offline. A manufacturer could pair SAMs for visual inspection with MoEs in the cloud for predictive analytics.

The implication is profound: AI strategy is becoming architecture strategy. Choosing the right model type is now as fundamental as choosing the right database or cloud provider. Use an LLM where an SLM would suffice, and you waste both budget and energy. Deploy a LAM without proper governance, and you risk automating errors at scale.
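The "right model for the job" decision can be sketched as a small rule table. This is a deliberately simplified illustration, assuming a hypothetical `Task` description and a hypothetical `choose_model_type` helper; real selection would also weigh cost, latency, data sensitivity, and governance requirements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    """Hypothetical description of what a workload needs."""
    needs_vision: bool = False
    needs_actions: bool = False
    must_run_offline: bool = False
    complex_reasoning: bool = False


def choose_model_type(task: Task) -> str:
    """Pick the least expensive model class that covers the task's needs."""
    if task.needs_vision:
        return "VLM"  # image plus text understanding
    if task.needs_actions:
        return "LAM"  # executes steps through tools and APIs
    if task.must_run_offline or not task.complex_reasoning:
        return "SLM"  # small, on-device, cheap to run
    return "LLM"      # reserve the large model for hard reasoning


# The retailer example: product recognition, stock updates, offline queries.
retail_tasks = {
    "recognise products": Task(needs_vision=True),
    "update stock levels": Task(needs_actions=True),
    "answer in-store queries": Task(must_run_offline=True),
}

plan = {name: choose_model_type(task) for name, task in retail_tasks.items()}
print(plan)
```

The ordering of the checks is the design choice worth noticing: the large model is the fallback, not the default, so budget and energy are spent on it only when nothing smaller fits.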

This diversification also reflects an ethical and operational maturity. Regulators and policy bodies such as the EU and the OECD now recognise distinctions between model classes, from general-purpose foundation models to high-risk operational systems. Governance can finally evolve beyond vague “AI ethics” into precise accountability: visual bias in VLMs is not the same as hallucination in LLMs or faulty actions in LAMs.

Efficiency and sustainability have become first-class business metrics. Google's Gemini 1.5, Mistral AI's Mixtral MoE, and Apple's on-device 3B model all point in the same direction: smaller, smarter, and greener. In a single year, inference costs have fallen 10×–100×, largely due to the rise of SLMs and MoEs. The new frontier isn't infinite scale; it's intelligent composition.

The Human Dimension

From One Intelligence to Many Assistants

For users, this shift is invisible but transformative. The AI you talk to at work, the one in your car, and the one inside your phone may all run on completely different models, tuned to your context, latency, and privacy needs.

You no longer “use an AI.” You live in an ecosystem of coordinated intelligences. One listens, one sees, one acts, each optimised for its moment of utility. Your phone's SLM might transcribe a meeting offline while a LAM updates your CRM in real time and an MoE-powered system summarises the day's outcomes for your manager.

For designers and strategists, this demands a new mindset. You're no longer building the AI experience; you're orchestrating a network of model experiences that feel seamless to the human on the other side. Trust, transparency, and explainability will hinge not on a single model's accuracy but on how the whole ensemble behaves together.

The Takeaway

The End of Monolithic Intelligence

The age of the “one model to rule them all” is ending. What replaces it is subtler but far more powerful: an AI ecosystem defined by choice, specialisation, and orchestration.

Product leaders who treat AI as a toolbox, combining LLMs, LAMs, SLMs, and beyond, will move faster, spend less, and innovate more sustainably. Those who cling to the old model-centric mindset risk being out-engineered by teams that design with composition in mind.

In 2019, intelligence meant scale. In 2025, it means fit.

Tomorrow's AI advantage won't belong to those who own the biggest model, but to those who know which model to trust, when, and why.