The demo works.

The model answers fluently. The predictions look sharp. The dashboard shows promising accuracy. The board approves the next phase.

Then it moves into production.

Suddenly, outputs drift. Responses contradict known facts. Escalations increase. Confidence drops. The instinctive reaction is familiar: retrain the model. Upgrade the architecture. Try a bigger one.

But the problem was never the model.

It was the data.

AI Inherits Every Weakness in the Estate Beneath It

Across enterprise deployments, a consistent pattern is emerging. AI systems do not typically fail because they are unintelligent. They fail because they are fed unstable, fragmented, ambiguous, or poorly governed data.

Gartner forecasts that through 2026, organisations will abandon 60% of AI projects unsupported by “AI-ready data”. That is not a model problem. It is a data management problem.

The same research notes that 63% of organisations either lack, or are unsure they have, the right data management practices for AI. Even high-maturity AI teams report data availability and quality as persistent barriers.

This is the quiet crisis underneath AI enthusiasm.

Enterprises have spent years modernising applications. They have not modernised the data conditions those applications produce.

AI simply makes the weakness visible.

What’s Really Breaking

In pilots, data is curated. Engineers manually patch inconsistencies. Edge cases are handled by hand. The model appears to perform.

Production removes that buffer.

Data arrives late. Schemas shift without warning. Fields are redefined. Ownership is unclear. Integration points fail. Systems that were loosely connected become tightly coupled through AI inference.

Research into production ML systems describes “hidden technical debt” in machine learning, a compounding burden to which unstable upstream data dependencies are a major contributor. Another body of work defines “data cascades”: downstream failures triggered by upstream data issues, which it reports as prevalent across high-stakes AI deployments.

These are not theoretical abstractions. They show up in named cases.

Amazon’s recruiting AI was trained on historical resume data that reflected gender imbalance, embedding bias into its outputs. The issue was not model sophistication. It was the data’s historical encoding of inequality.

In healthcare, external validation of a widely deployed sepsis prediction model revealed materially worse real-world performance than reported in development. Training-serving skew (differences between the data a model learns from and the data it meets in live use) degraded its effectiveness.

Zillow’s iBuying initiative absorbed a $304 million write-down after forecasting models misjudged market volatility. The algorithm did what it was trained to do. The world changed faster than the data assumptions embedded in it.

Each case follows the same arc: the model reflects the data estate. When that estate is fragmented, biased, stale, or volatile, AI amplifies the flaw.

Fragmentation, Ownership, and the Illusion of Control

Modern enterprises often run hundreds of applications. Salesforce reports that the average enterprise operates 897 applications, with only 29% connected, and leaders estimate 19% of data is “trapped”.

That fragmentation is survivable when humans manually reconcile discrepancies. It is not survivable when AI must reason across systems in real time.

Schema instability compounds the issue. A small upstream change, such as a renamed column or a revised definition, can silently distort downstream predictions. Production ML research highlights training-serving skew as a major source of errors. The model remains unchanged. The data semantics shift.
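To make this concrete, here is a minimal Python sketch of how such a shift might be caught before it reaches a model: compare a training sample with a live sample for missing or unexpected columns, and score value drift on shared columns. It assumes records arrive as dictionaries with categorical values and uses the Population Stability Index (PSI) as the drift measure. The 0.2 alert threshold is a common rule of thumb, not a standard. The function names and example fields are hypothetical.

```python
import math
from collections import Counter


def psi(expected, actual, eps=1e-6):
    """Population Stability Index between two non-empty samples of categorical values.

    Categories absent from one sample get a small floor (eps) so the log is defined.
    """
    exp_counts, act_counts = Counter(expected), Counter(actual)
    total = 0.0
    for category in set(exp_counts) | set(act_counts):
        e = max(exp_counts[category] / len(expected), eps)
        a = max(act_counts[category] / len(actual), eps)
        total += (a - e) * math.log(a / e)
    return total


def skew_report(train_rows, live_rows, threshold=0.2):
    """Compare training and live records (lists of dicts) for schema and value drift.

    Assumes uniform records: the schema is read from the first record of each sample.
    The 0.2 threshold is an assumed rule of thumb, not a standard.
    """
    train_cols, live_cols = set(train_rows[0]), set(live_rows[0])
    report = {
        "missing_columns": sorted(train_cols - live_cols),     # e.g. renamed upstream
        "unexpected_columns": sorted(live_cols - train_cols),  # new or renamed fields
        "drifted_columns": {},
    }
    # Only columns present on both sides can be compared for semantic drift.
    for col in sorted(train_cols & live_cols):
        score = psi([r[col] for r in train_rows], [r[col] for r in live_rows])
        if score > threshold:
            report["drifted_columns"][col] = round(score, 2)
    return report


# Hypothetical example: upstream renamed "country" and recoded "segment".
train = [
    {"segment": "retail", "country": "UK"},
    {"segment": "retail", "country": "FR"},
    {"segment": "business", "country": "UK"},
    {"segment": "business", "country": "DE"},
]
live = [
    {"segment": "R", "country_code": "UK"},
    {"segment": "R", "country_code": "FR"},
    {"segment": "B", "country_code": "UK"},
    {"segment": "B", "country_code": "DE"},
]
print(skew_report(train, live))
```

Here the report flags `country` as missing, `country_code` as unexpected and `segment` as drifted, even though nothing about the model has changed. Real pipelines would also need numeric binning and sampling over time windows; this sketch only shows the shape of the check.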

Ownership ambiguity further magnifies risk. Gartner notes that while leaders agree data quality is critical, they often do not consider it their responsibility. When outputs degrade, blame flows toward “the AI” rather than the upstream data producers.

This cultural reflex slows remediation. Teams retrain models instead of fixing data pipelines. They tweak prompts instead of enforcing data contracts.

The result is compounding data debt.

Why It Matters Now

In 2024, many AI systems were advisory. They generated content, summarised information, assisted workflows.

In 2026, AI increasingly makes decisions.

It routes customers. Flags fraud. Approves transactions. Recommends treatments. Allocates resources.

When AI steps into decision loops, data errors become business risks.

Regulatory pressure reflects this reality. The EU AI Act requires training, validation and testing datasets for high-risk AI systems to be relevant, sufficiently representative and, to the best extent possible, free of errors and complete. NIST’s AI Risk Management Framework emphasises documented consideration of data collection, representativeness, and suitability.

Data governance is no longer an optimisation. It is compliance infrastructure.

Boards increasingly ask whether AI outputs can be trusted. Trust depends not on model size, but on upstream reliability.

The uncomfortable truth is this: most organisations have more AI ambition than data discipline.

The Strategic Reframe

AI success is not a model race. It is a data reliability challenge.

Organisations that treat data as a product, with clear ownership, versioning, lineage, and monitoring, create conditions where AI can operate continuously.

Those that treat data as exhaust from applications accumulate debt.
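Versioning and lineage can sound abstract, so here is one minimal way to make them concrete, sketched in Python under stated assumptions. A dataset version is derived from a hash of its content, so any silent change produces a new identifier, and each training run records which dataset version, upstream systems and owner it depended on. The `LineageRecord` structure, its field names and the example values are hypothetical, not any particular catalogue's schema.

```python
import hashlib
import json
from dataclasses import dataclass


def dataset_version(rows):
    """Content-addressed version id: identical content gives an identical id.

    Keys are sorted so dict key order does not matter; row order still does.
    """
    canonical = json.dumps(rows, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


@dataclass(frozen=True)
class LineageRecord:
    """Hypothetical lineage entry linking a model to the exact data it was trained on."""
    model_name: str
    dataset_name: str
    dataset_version: str
    upstream_sources: tuple  # systems the dataset was derived from
    owner: str               # accountable domain team


rows = [{"customer_id": 1, "segment": "retail"}, {"customer_id": 2, "segment": "business"}]
record = LineageRecord(
    model_name="churn-model",           # hypothetical
    dataset_name="customer_features",   # hypothetical
    dataset_version=dataset_version(rows),
    upstream_sources=("crm", "billing"),
    owner="customer-data team",
)
print(record)

# Later: if upstream silently changes a value, the version no longer matches.
changed = [{"customer_id": 1, "segment": "retail"}, {"customer_id": 2, "segment": "smb"}]
print(dataset_version(changed) == record.dataset_version)  # False
```

The design choice is that the version is computed from the data rather than assigned by hand, so it cannot drift out of step with the content it names.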

The shift requires more than better pipelines. It requires architectural and behavioural change:

- Data contracts that prevent silent schema changes.

- Real-time observability across pipelines and inference.

- Clear domain ownership of datasets.

- Version control and lineage tracking.

- Governance embedded in workflows rather than layered afterward.
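A data contract can be as simple as a declared, versioned, owned schema that producers validate against before publishing, so an upstream rename fails loudly at the boundary instead of silently downstream. The Python sketch below assumes records are dictionaries and treats an explicit `None` as a missing value. `FieldSpec`, `DataContract` and the `orders` example are hypothetical illustrations, not a specific tool's API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FieldSpec:
    dtype: type
    required: bool = True
    allowed: Optional[frozenset] = None  # enumerated values, if the field is a code


@dataclass(frozen=True)
class DataContract:
    """Hypothetical contract: a named, versioned, owned schema for one dataset."""
    name: str
    version: str
    owner: str    # the domain team accountable for the dataset
    fields: dict  # field name -> FieldSpec

    def validate(self, record):
        """Return a list of contract violations for one record (empty if valid)."""
        violations = []
        for name, spec in self.fields.items():
            value = record.get(name)  # an explicit None is treated as missing
            if value is None:
                if spec.required:
                    violations.append(f"missing required field '{name}'")
                continue
            if not isinstance(value, spec.dtype):
                violations.append(
                    f"'{name}' expected {spec.dtype.__name__}, got {type(value).__name__}"
                )
            elif spec.allowed is not None and value not in spec.allowed:
                violations.append(f"'{name}' value {value!r} not in allowed set")
        # Fields the contract does not declare usually signal an unannounced change.
        for name in sorted(record.keys() - self.fields.keys()):
            violations.append(f"undeclared field '{name}'")
        return violations


orders_v1 = DataContract(
    name="orders",
    version="1.0.0",
    owner="order-management team",
    fields={
        "order_id": FieldSpec(str),
        "amount": FieldSpec(float),
        "currency": FieldSpec(str, allowed=frozenset({"GBP", "EUR", "USD"})),
        "channel": FieldSpec(str, required=False),
    },
)

print(orders_v1.validate({"order_id": "A-1", "amount": 12.5, "currency": "GBP"}))  # []
print(orders_v1.validate({"order_id": "A-2", "amount_gbp": 12.5, "currency": "gbp"}))
```

The second record, where an upstream team renamed `amount` and lower-cased the currency code, produces three violations: the missing `amount`, the disallowed `'gbp'` value and the undeclared `amount_gbp`. Carrying `owner` and `version` on the contract itself ties validation to the ownership and versioning items in the list above.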

McKinsey notes that scaling GenAI requires improving source data, investing in automated evaluation, and strengthening lineage and cataloguing practices.

This is not glamorous work. It does not produce immediate headlines. But it determines whether AI initiatives survive year two.

The Human Dimension

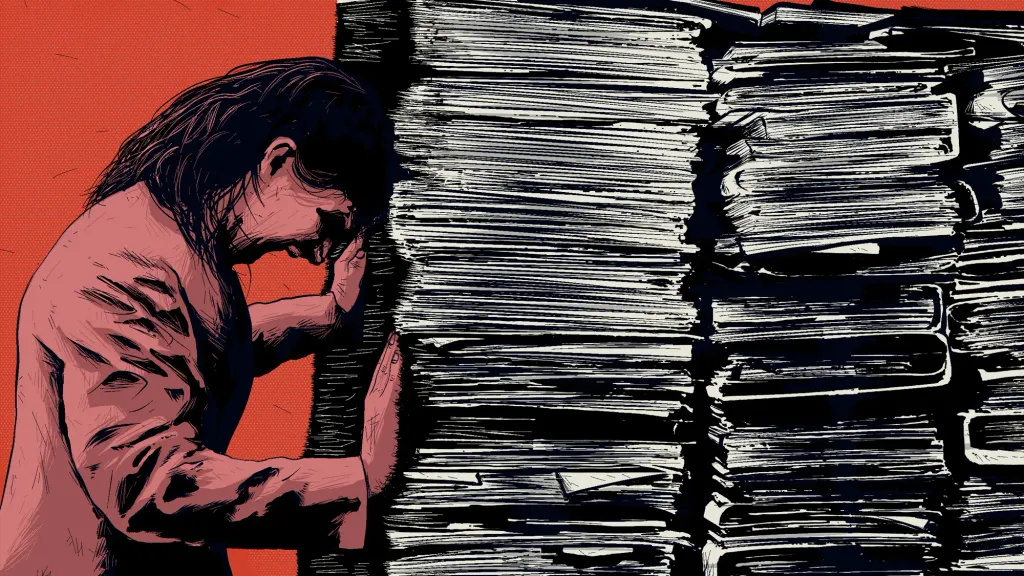

For CIOs and CDOs, the data debt crisis is as much organisational as technical.

Data engineering often lacks the visible prestige of model experimentation. Teams celebrate innovation sprints more than semantic audits. Yet production reliability depends on the latter.

If you have ever watched a model degrade inexplicably after deployment, you have seen data debt surface.

If you have ever struggled to answer, “which system owns this field?”, you have felt its cost.

The temptation is to fix what is visible: the model output. The harder task is to repair what is upstream.

AI makes data quality culturally unavoidable. When outputs become autonomous, excuses disappear.

Your customers do not care whether the error originated in a CRM sync or a schema migration. They see the AI’s mistake.

Trust erodes quickly.

What Happens Next

The data debt crisis will not slow AI adoption. It will shape its maturity.

Enterprises that confront data conditions early will compound advantage. They will experience fewer cascading failures. They will retrain less frequently. They will scale faster with fewer surprises.

Those that defer data governance will find AI initiatives repeatedly stalling in production.

The lesson is straightforward but demanding.

Fix the estate before you fix the model.

AI does not create new weaknesses.

It reveals the ones you have been living with all along.